Content from Basic Queries

Last updated on 2025-12-12 | Edit this page

Overview

Questions

- How can we select and download the data we want from the Gaia server?

Objectives

- Compose a basic query in ADQL/SQL.

- Use queries to explore a database and its tables.

- Use queries to download data.

- Develop, test, and debug a query incrementally.

As a running example, we will replicate parts of the analysis in the paper, “Off the beaten path: Gaia reveals GD-1 stars outside of the main stream” by Adrian Price-Whelan and Ana Bonaca.

Outline

This episode demonstrates the steps for selecting and downloading data from the Gaia Database:

First we will make a connection to the Gaia server,

We will explore information about the database and the tables it contains,

We will write a query and send it to the server, and finally

We will download the response from the server.

Query Language

In order to select data from a database, you need to compose a query, which is a program written in a “query language”. The query language we will use is ADQL, which stands for “Astronomical Data Query Language”.

ADQL is a dialect of SQL (Structured Query Language), which is by far the most commonly used query language. Almost everything you will learn about ADQL also works in SQL.

The reference manual for ADQL is here. But you might find it easier to learn from this ADQL Cookbook.

Using Jupyter

If you have not worked with Jupyter notebooks before, you might start with the tutorial from Jupyter.org called “Try Classic Notebook”, or this tutorial from DataQuest.

There are two environments you can use to write and run notebooks:

“Jupyter Notebook” is the original, and

“Jupyter Lab” is a newer environment with more features.

For this lesson, you can use either one.

Here are the most important things to know about running a Jupyter notebook:

Notebooks are made up of code cells and text cells (and a few other less common kinds). Code cells contain code; text cells contain explanatory text written in Markdown.

To run a code cell, click the cell to select it and press Shift-Enter. The output of the code should appear below the cell.

In general, notebooks only run correctly if you run every code cell in order from top to bottom. If you run cells out of order, you are likely to get errors.

You can modify existing cells, but then you have to run them again to see the effect.

You can add new cells, but you need to be careful about the order you run them in.

If you have added or modified cells, and the behavior of the notebook seems strange, you can restart the “kernel”, which clears all of the variables and functions you have defined, and run the cells again from the beginning.

If you are using Jupyter Notebook, open the

Kernelmenu and select “Restart and Run All”.In Jupyter Lab, open the

Kernelmenu and select “Restart Kernel and Run All Cells”.

Before you continue with this lesson, you might want to explore the other menus and the toolbar to see what else you can do.

Connecting to Gaia

The library we will use to get Gaia data is Astroquery.

Astroquery provides Gaia, which is an object

that represents a connection to the Gaia database.

We can connect to the Gaia database like this:

Old versions of astroquery output

if you are using a version of astroquery that’s older than v0.4.4, you may see this output

OUTPUT

Created TAP+ (v1.2.1) - Connection:

Host: gea.esac.esa.int

Use HTTPS: True

Port: 443

SSL Port: 443

Created TAP+ (v1.2.1) - Connection:

Host: geadata.esac.esa.int

Use HTTPS: True

Port: 443

SSL Port: 443This import statement creates a TAP+ connection; TAP stands for “Table Access Protocol”, which is a network protocol for sending queries to the database and getting back the results.

Databases and Tables

What is a database? Most generally, it can be any collection of data, but when we are talking about ADQL or SQL:

A database is a collection of one or more named tables.

Each table is a 2-D array with one or more named columns of data.

We can use Gaia.load_tables to get the names of the

tables in the Gaia database. With the option

only_names=True, it loads information about the tables,

called “metadata”, but not the data itself.

OUTPUT

INFO: Retrieving tables... [astroquery.utils.tap.core]

INFO: Parsing tables... [astroquery.utils.tap.core]

INFO: Done. [astroquery.utils.tap.core]The following for loop prints the names of the

tables.

OUTPUT

external.apassdr9

external.gaiadr2_geometric_distance

external.gaiaedr3_distance

external.galex_ais

external.ravedr5_com

external.ravedr5_dr5

external.ravedr5_gra

external.ravedr5_on

external.sdssdr13_photoprimary

external.skymapperdr1_master

external.skymapperdr2_master

[Output truncated]So that is a lot of tables. The ones we will use are:

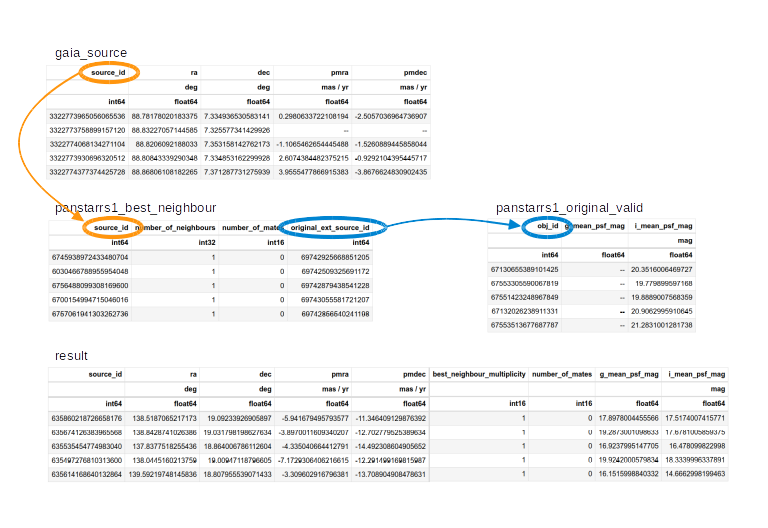

gaiadr2.gaia_source, which contains Gaia data from data release 2,gaiadr2.panstarrs1_original_valid, which contains the photometry data we will use from PanSTARRS, andgaiadr2.panstarrs1_best_neighbour, which we will use to cross-match each star observed by Gaia with the same star observed by PanSTARRS.

We can use load_table (not load_tables) to

get the metadata for a single table. The name of this function is

misleading, because it only downloads metadata, not the contents of the

table.

OUTPUT

Retrieving table 'gaiadr2.gaia_source'

Parsing table 'gaiadr2.gaia_source'...

Done.

<astroquery.utils.tap.model.taptable.TapTableMeta at 0x7f50edd2aeb0>Jupyter shows that the result is an object of type

TapTableMeta, but it does not display the contents.

To see the metadata, we have to print the object.

OUTPUT

TAP Table name: gaiadr2.gaiadr2.gaia_source

Description: This table has an entry for every Gaia observed source as listed in the

Main Database accumulating catalogue version from which the catalogue

release has been generated. It contains the basic source parameters,

that is only final data (no epoch data) and no spectra (neither final

nor epoch).

Size (bytes): 4906520690688

Num. columns: 96Columns

The following loop prints the names of the columns in the table.

OUTPUT

solution_id

designation

source_id

random_index

ref_epoch

ra

ra_error

dec

dec_error

parallax

parallax_error

[Output truncated]You can probably infer what many of these columns are by looking at the names, but you should resist the temptation to guess. To find out what the columns mean, read the documentation.

Exercise (2 minutes)

One of the other tables we will use is

gaiadr2.panstarrs1_original_valid. Use

load_table to get the metadata for this table. How many

columns are there and what are their names?

PYTHON

panstarrs_metadata = Gaia.load_table('gaiadr2.panstarrs1_original_valid')

print(panstarrs_metadata)OUTPUT

Retrieving table 'gaiadr2.panstarrs1_original_valid'

TAP Table name: gaiadr2.gaiadr2.panstarrs1_original_valid

Description: The Panoramic Survey Telescope and Rapid Response System (Pan-STARRS) is

a system for wide-field astronomical imaging developed and operated by

the Institute for Astronomy at the University of Hawaii. Pan-STARRS1

[Output truncated]

Catalogue curator:

SSDC - ASI Space Science Data Center

https://www.ssdc.asi.it/

Size (bytes): 933802426368

Num. columns: 26OUTPUT

obj_name

obj_id

ra

dec

ra_error

dec_error

epoch_mean

g_mean_psf_mag

g_mean_psf_mag_error

g_flags

r_mean_psf_mag

r_mean_psf_mag_error

r_flags

i_mean_psf_mag

i_mean_psf_mag_error

i_flags

z_mean_psf_mag

z_mean_psf_mag_error

z_flags

y_mean_psf_mag

y_mean_psf_mag_error

y_flags

n_detections

zone_id

obj_info_flag

quality_flagWriting queries

You might be wondering how we download these tables. With tables this big, you generally don’t. Instead, you use queries to select only the data you want.

A query is a program written in a query language like SQL. For the Gaia database, the query language is a dialect of SQL called ADQL.

Here’s an example of an ADQL query.

Triple-quotes strings

We use a triple-quoted string here so we can include line breaks in the query, which makes it easier to read.

The words in uppercase are ADQL keywords:

SELECTindicates that we are selecting data (as opposed to adding or modifying data).TOPindicates that we only want the first 10 rows of the table, which is useful for testing a query before asking for all of the data.FROMspecifies which table we want data from.

The third line is a list of column names, indicating which columns we want.

In this example, the keywords are capitalized and the column names are lowercase. This is a common style, but it is not required. ADQL and SQL are not case-sensitive.

Also, the query is broken into multiple lines to make it more readable. This is a common style, but not required. Line breaks don’t affect the behavior of the query.

To run this query, we use the Gaia object, which

represents our connection to the Gaia database, and invoke

launch_job:

OUTPUT

<astroquery.utils.tap.model.job.Job at 0x7f50edd2adc0>The result is an object that represents the job running on a Gaia server.

If you print it, it displays metadata for the forthcoming results.

OUTPUT

<Table length=10>

name dtype unit description n_bad

--------- ------- ---- ------------------------------------------------------------------ -----

source_id int64 Unique source identifier (unique within a particular Data Release) 0

ra float64 deg Right ascension 0

dec float64 deg Declination 0

parallax float64 mas Parallax 2

Jobid: None

Phase: COMPLETED

Owner: None

Output file: sync_20210315090602.xml.gz

[Output truncated]Don’t worry about Results: None. That does not actually

mean there are no results.

However, Phase: COMPLETED indicates that the job is

complete, so we can get the results like this:

OUTPUT

astropy.table.table.TableThe type function indicates that the result is an Astropy Table.

Repetition

Why is table repeated three times? The first is the name

of the module, the second is the name of the submodule, and the third is

the name of the class. Most of the time we only care about the last one.

It’s like the Linnean name for the Western lowland gorilla, which is

Gorilla gorilla gorilla.

An Astropy Table is similar to a table in an SQL

database except:

SQL databases are stored on disk drives, so they are persistent; that is, they “survive” even if you turn off the computer. An Astropy

Tableis stored in memory; it disappears when you turn off the computer (or shut down your Jupyter notebook).SQL databases are designed to process queries. An Astropy

Tablecan perform some query-like operations, like selecting columns and rows. But these operations use Python syntax, not SQL.

Jupyter knows how to display the contents of a

Table.

OUTPUT

<Table length=10>

source_id ra dec parallax

deg deg mas

int64 float64 float64 float64

------------------- ------------------ ------------------- --------------------

5887983246081387776 227.978818386372 -53.64996962450103 1.0493172163332998

5887971250213117952 228.32280834041364 -53.66270726203726 0.29455652682279093

5887991866047288704 228.1582047014091 -53.454724911639794 -0.5789179941669236

5887968673232040832 228.07420888099884 -53.8064612895961 0.41030970779603076

5887979844465854720 228.42547805195946 -53.48882284470035 -0.23379683441525864

5887978607515442688 228.23831627636855 -53.56328249482688 -0.9252161956789068

[Output truncated]Each column has a name, units, and a data type.

For example, the units of ra and dec are

degrees, and their data type is float64, which is a 64-bit

floating-point

number, used to store measurements with a fraction part.

This information comes from the Gaia database, and has been stored in

the Astropy Table by Astroquery.

Exercise (3 minutes)

Read the documentation of this table and choose a column that looks interesting to you. Add the column name to the query and run it again. What are the units of the column you selected? What is its data type?

For example, we can add radial_velocity : Radial velocity (double, Velocity[km/s] ) - Spectroscopic radial velocity in the solar barycentric reference frame. The radial velocity provided is the median value of the radial velocity measurements at all epochs.

PYTHON

query1_with_rv = """SELECT

TOP 10

source_id, ra, dec, parallax, radial_velocity

FROM gaiadr2.gaia_source

"""

job1_with_rv = Gaia.launch_job(query1_with_rv)

results1_with_rv = job1_with_rv.get_results()

results1_with_rvOUTPUT

source_id ra ... parallax radial_velocity

deg ... mas km / s

------------------- ------------------ ... -------------------- ---------------

5800603716991968256 225.13905251174302 ... 0.5419737483675161 --

5800592790577127552 224.30113911598448 ... -0.6369101209622813 --

5800601273129497856 225.03260084885449 ... 0.27554460953986526 --

[Output truncated]Asynchronous queries

launch_job asks the server to run the job

“synchronously”, which normally means it runs immediately. But

synchronous jobs are limited to 2000 rows. For queries that return more

rows, you should run “asynchronously”, which mean they might take longer

to get started.

If you are not sure how many rows a query will return, you can use

the SQL command COUNT to find out how many rows are in the

result without actually returning them. We will see an example in the

next lesson.

The results of an asynchronous query are stored in a file on the server, so you can start a query and come back later to get the results. For anonymous users, files are kept for three days.

As an example, let us try a query that is similar to

query1, with these changes:

It selects the first 3000 rows, so it is bigger than we should run synchronously.

It selects two additional columns,

pmraandpmdec, which are proper motions along the axes ofraanddec.It uses a new keyword,

WHERE.

PYTHON

query2 = """SELECT

TOP 3000

source_id, ra, dec, pmra, pmdec, parallax

FROM gaiadr2.gaia_source

WHERE parallax < 1

"""A WHERE clause indicates which rows we want; in this

case, the query selects only rows “where” parallax is less

than 1. This has the effect of selecting stars with relatively low

parallax, which are farther away. We’ll use this clause to exclude

nearby stars that are unlikely to be part of GD-1.

WHERE is one of the most common clauses in ADQL/SQL, and

one of the most useful, because it allows us to download only the rows

we need from the database.

We use launch_job_async to submit an asynchronous

query.

OUTPUT

INFO: Query finished. [astroquery.utils.tap.core]

<astroquery.utils.tap.model.job.Job at 0x7f50edd40f40>And here are the results.

OUTPUT

<Table length=3000>

source_id ra ... parallax radial_velocity

deg ... mas km / s

int64 float64 ... float64 float64

------------------- ------------------ ... -------------------- ---------------

5895270396817359872 213.08433715252883 ... 2.041947005434917 --

5895272561481374080 213.2606587905109 ... 0.15693467895110133 --

5895247410183786368 213.38479712976664 ... -0.19017525742552605 --

5895249226912448000 213.41587389088238 ... -- --

5895261875598904576 213.5508930114549 ... -0.29471722363529257 --

5895258302187834624 213.87631129557286 ... 0.6468437015289753 --

[Output truncated]You might notice that some values of parallax are

negative. As this

FAQ explains, “Negative parallaxes are caused by errors in the

observations.” They have “no physical meaning,” but they can be a

“useful diagnostic on the quality of the astrometric solution.”

Different results

Your results for this query may differ from the Instructor’s. This is

because TOP 3000 returns 3000 results, but those results

are not organized in any particular way.

Exercise (5 minutes)

The clauses in a query have to be in the right order. Go back and

change the order of the clauses in query2 and run it again.

The modified query should fail, but notice that you don’t get much

useful debugging information.

For this reason, developing and debugging ADQL queries can be really hard. A few suggestions that might help:

Whenever possible, start with a working query, either an example you find online or a query you have used in the past.

Make small changes and test each change before you continue.

While you are debugging, use

TOPto limit the number of rows in the result. That will make each test run faster, which reduces your development time.Launching test queries synchronously might make them start faster, too.

In this example, the WHERE clause is in the wrong place.

ERROR

query2_erroneous = """SELECT

TOP 3000

source_id, ra, dec, pmra, pmdec, parallax

FROM gaiadr2.gaia_source

WHERE parallax < 1

"""Operators

In a WHERE clause, you can use any of the SQL comparison

operators; here are the most common ones:

| Symbol | Operation |

|---|---|

> |

greater than |

< |

less than |

>= |

greater than or equal |

<= |

less than or equal |

= |

equal |

!= or <>

|

not equal |

Most of these are the same as Python, but some are not. In

particular, notice that the equality operator is =, not

==. Be careful to keep your Python out of your ADQL!

You can combine comparisons using the logical operators:

- AND: true if both comparisons are true

- OR: true if either or both comparisons are true

Finally, you can use NOT to invert the result of a

comparison.

Exercise (5 minutes)

Read about

SQL operators here and then modify the previous query to select rows

where bp_rp is between -0.75 and

2.

bp_rp contains BP-RP color, which is the difference

between two other columns, phot_bp_mean_mag and

phot_rp_mean_mag. You can read

about this variable here.

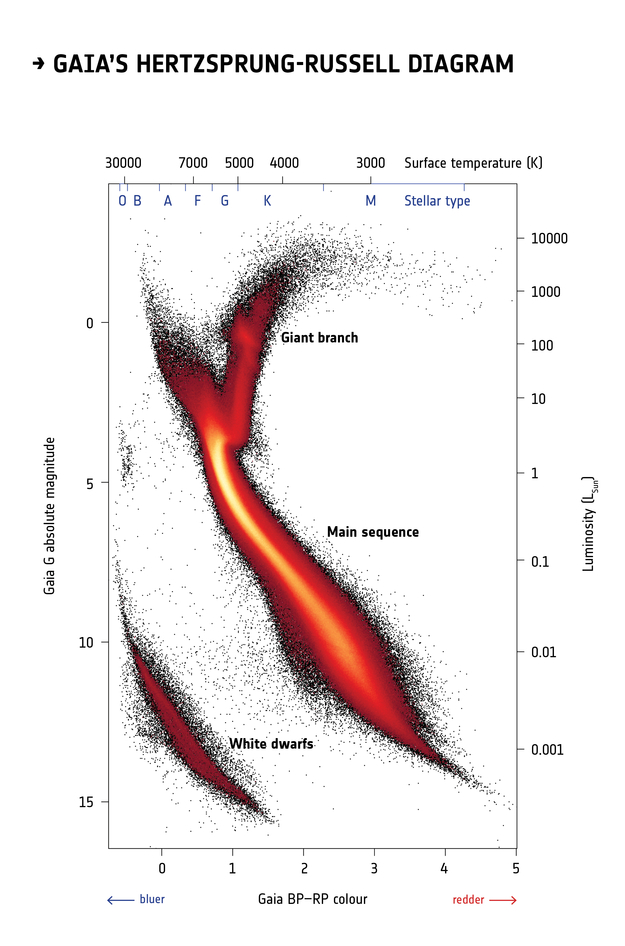

This Hertzsprung-Russell diagram shows the BP-RP color and luminosity of stars in the Gaia catalog (Copyright: ESA/Gaia/DPAC, CC BY-SA 3.0 IGO).

Selecting stars with bp-rp less than 2 excludes many class M dwarf stars, which are low

temperature, low luminosity. A star like that at GD-1’s distance would

be hard to detect, so if it is detected, it is more likely to be in the

foreground.

Formatting queries

The queries we have written so far are string “literals”, meaning that the entire string is part of the program. But writing queries yourself can be slow, repetitive, and error-prone.

It is often better to write Python code that assembles a query for

you. One useful tool for that is the string

format method.

As an example, we will divide the previous query into two parts; a list of column names and a “base” for the query that contains everything except the column names.

Here is the list of columns we will select.

And here is the base. It is a string that contains at least one format specifier in curly brackets (braces).

PYTHON

query3_base = """SELECT

TOP 10

{columns}

FROM gaiadr2.gaia_source

WHERE parallax < 1

AND bp_rp BETWEEN -0.75 AND 2

"""This base query contains one format specifier,

{columns}, which is a placeholder for the list of column

names we will provide.

To assemble the query, we invoke format on the base

string and provide a keyword argument that assigns a value to

columns.

In this example, the variable that contains the column names and the variable in the format specifier have the same name. That is not required, but it is a common style.

The result is a string with line breaks. If you display it, the line

breaks appear as \n.

OUTPUT

'SELECT \nTOP 10 \nsource_id, ra, dec, pmra, pmdec, parallax\nFROM gaiadr2.gaia_source\nWHERE parallax < 1\n AND bp_rp BETWEEN -0.75 AND 2\n'But if you print it, the line breaks appear as line breaks.

OUTPUT

SELECT

TOP 10

source_id, ra, dec, pmra, pmdec, parallax

FROM gaiadr2.gaia_source

WHERE parallax < 1

AND bp_rp BETWEEN -0.75 AND 2Notice that the format specifier has been replaced with the value of

columns.

Let’s run it and see if it works:

OUTPUT

<Table length=10>

name dtype unit description

--------- ------- -------- ------------------------------------------------------------------

source_id int64 Unique source identifier (unique within a particular Data Release)

ra float64 deg Right ascension

dec float64 deg Declination

pmra float64 mas / yr Proper motion in right ascension direction

pmdec float64 mas / yr Proper motion in declination direction

parallax float64 mas Parallax

Jobid: None

Phase: COMPLETED

Owner: None

[Output truncated]OUTPUT

<Table length=10>

source_id ra ... parallax

deg ... mas

------------------- ------------------ ... -------------------

3031147124474711552 110.10540720349103 ... 0.47255775887968876

3031114276567648256 110.92831846731636 ... 0.41817219481822415

3031130872315906048 110.61072654450903 ... 0.178490206751036

3031128162192428544 110.78664993513391 ... 0.8412331482786942

3031140497346996736 110.0617759777779 ... 0.16993569795437397

3031111910043832576 110.84459425332385 ... 0.4668864606089576

[Output truncated]Exercise (10 minutes)

This query always selects sources with parallax less

than 1. But suppose you want to take that upper bound as an input.

Modify query3_base to replace 1 with a

format specifier like {max_parallax}. Now, when you call

format, add a keyword argument that assigns a value to

max_parallax, and confirm that the format specifier gets

replaced with the value you provide.

Summary

This episode has demonstrated the following steps:

Making a connection to the Gaia server,

Exploring information about the database and the tables it contains,

Writing a query and sending it to the server, and finally

Downloading the response from the server as an Astropy

Table.

In the next episode we will extend these queries to select a particular region of the sky.

- If you can’t download an entire dataset (or it is not practical) use queries to select the data you need.

- Read the metadata and the documentation to make sure you understand the tables, their columns, and what they mean.

- Develop queries incrementally: start with something simple, test it, and add a little bit at a time.

- Use ADQL features like

TOPandCOUNTto test before you run a query that might return a lot of data. - If you know your query will return fewer than 3000 rows, you can run it synchronously. If it might return more than 3000 rows, you should run it asynchronously.

- ADQL and SQL are not case-sensitive. You don’t have to capitalize the keywords, but it will make your code more readable.

- ADQL and SQL don’t require you to break a query into multiple lines, but it will make your code more readable.

- Make each section of the notebook self-contained. Try not to use the same variable name in more than one section.

- Keep notebooks short. Look for places where you can break your analysis into phases with one notebook per phase.

Content from Coordinate Transformations

Last updated on 2025-12-15 | Edit this page

Overview

Questions

- How do we transform celestial coordinates from one frame to another and save a subset of the results in files?

Objectives

- Use Python string formatting to compose more complex ADQL queries.

- Work with coordinates and other quantities that have units.

- Download the results of a query and store them in a file.

In the previous episode, we wrote ADQL queries and used them to select and download data from the Gaia server. In this episode, we will write a query to select stars from a particular region of the sky.

Outline

We’ll start with an example that does a “cone search”; that is, it selects stars that appear in a circular region of the sky.

Then, to select stars in the vicinity of GD-1, we will:

Use

Quantityobjects to represent measurements with units.Use Astropy to convert coordinates from one frame to another.

Use the ADQL keywords

POLYGON,CONTAINS, andPOINTto select stars that fall within a polygonal region.Submit a query and download the results.

Store the results in a FITS file.

Working with Units

The measurements we will work with are physical quantities, which means that they have two parts, a value and a unit. For example, the coordinate 30° has value 30 and its units are degrees.

Until recently, most scientific computation was done with values only; units were left out of the program altogether, sometimes with catastrophic results.

Astropy provides tools for including units explicitly in computations, which makes it possible to detect errors before they cause disasters.

To use Astropy units, we import them like this:

u is an object that contains most common units and all

SI units.

You can use dir to list them, but you should also read the

documentation.

OUTPUT

['A',

'AA',

'AB',

'ABflux',

'ABmag',

'AU',

'Angstrom',

'B',

'Ba',

'Barye',

'Bi',

[Output truncated]To create a quantity, we multiply a value by a unit:

OUTPUT

astropy.units.quantity.QuantityThe result is a Quantity object. Jupyter knows how to

display Quantities like this:

OUTPUT

<Quantity 10. deg>10°

Quantities provides a method called to that

converts to other units. For example, we can compute the number of

arcminutes in angle:

OUTPUT

<Quantity 600. arcmin>600′

If you add quantities, Astropy converts them to compatible units, if possible:

OUTPUT

<Quantity 10.5 deg>10.5°

If the units are not compatible, you get an error. For example:

ERROR

angle + 5 * u.kgcauses a UnitConversionError.

Exercise (5 minutes)

Create a quantity that represents 5 arcminutes

and assign it to a variable called radius.

Then convert it to degrees.

Selecting a Region

One of the most common ways to restrict a query is to select stars in a particular region of the sky. For example, here is a query from the Gaia archive documentation that selects objects in a circular region centered at (88.8, 7.4) with a search radius of 5 arcmin (0.08333 deg).

PYTHON

cone_query = """SELECT

TOP 10

source_id

FROM gaiadr2.gaia_source

WHERE 1=CONTAINS(

POINT(ra, dec),

CIRCLE(88.8, 7.4, 0.08333333))

"""This query uses three keywords that are specific to ADQL (not SQL):

POINT: a location in ICRS coordinates, specified in degrees of right ascension and declination.CIRCLE: a circle where the first two values are the coordinates of the center and the third is the radius in degrees.CONTAINS: a function that returns1if aPOINTis contained in a shape and0otherwise. Here is the documentation ofCONTAINS.

A query like this is called a cone search because it selects stars in a cone. Here is how we run it:

OUTPUT

Created TAP+ (v1.2.1) - Connection:

Host: gea.esac.esa.int

Use HTTPS: True

Port: 443

SSL Port: 443

Created TAP+ (v1.2.1) - Connection:

Host: geadata.esac.esa.int

Use HTTPS: True

Port: 443

SSL Port: 443

<astroquery.utils.tap.model.job.Job at 0x7f277785fa30>OUTPUT

<Table length=10>

source_id

int64

-------------------

3322773965056065536

3322773758899157120

3322774068134271104

3322773930696320512

3322774377374425728

3322773724537891456

3322773724537891328

[Output truncated]Exercise (5 minutes)

When you are debugging queries like this, you can use

TOP to limit the size of the results, but then you still

don’t know how big the results will be.

An alternative is to use COUNT, which asks for the

number of rows that would be selected, but it does not return them.

In the previous query, replace TOP 10 source_id with

COUNT(source_id) and run the query again. How many stars

has Gaia identified in the cone we searched?

PYTHON

count_cone_query = """SELECT

COUNT(source_id)

FROM gaiadr2.gaia_source

WHERE 1=CONTAINS(

POINT(ra, dec),

CIRCLE(88.8, 7.4, 0.08333333))

"""

count_cone_job = Gaia.launch_job(count_cone_query)

count_cone_results = count_cone_job.get_results()

count_cone_resultsOUTPUT

<Table length=1>

count

int64

-----

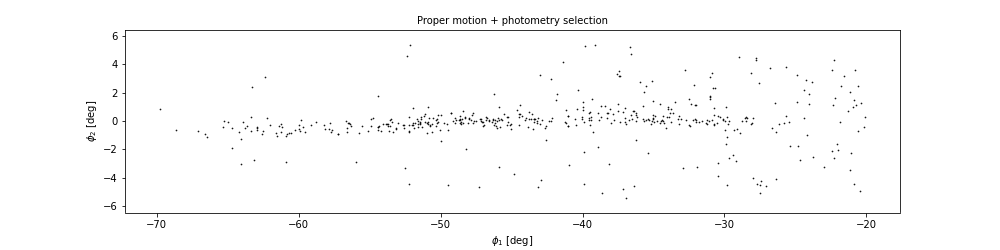

594Getting GD-1 Data

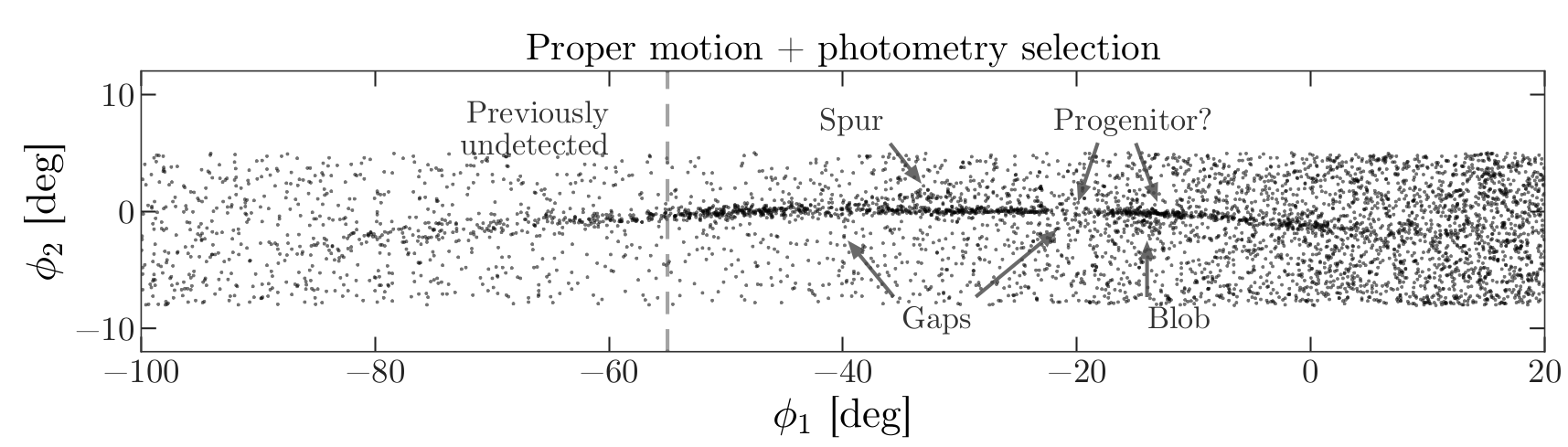

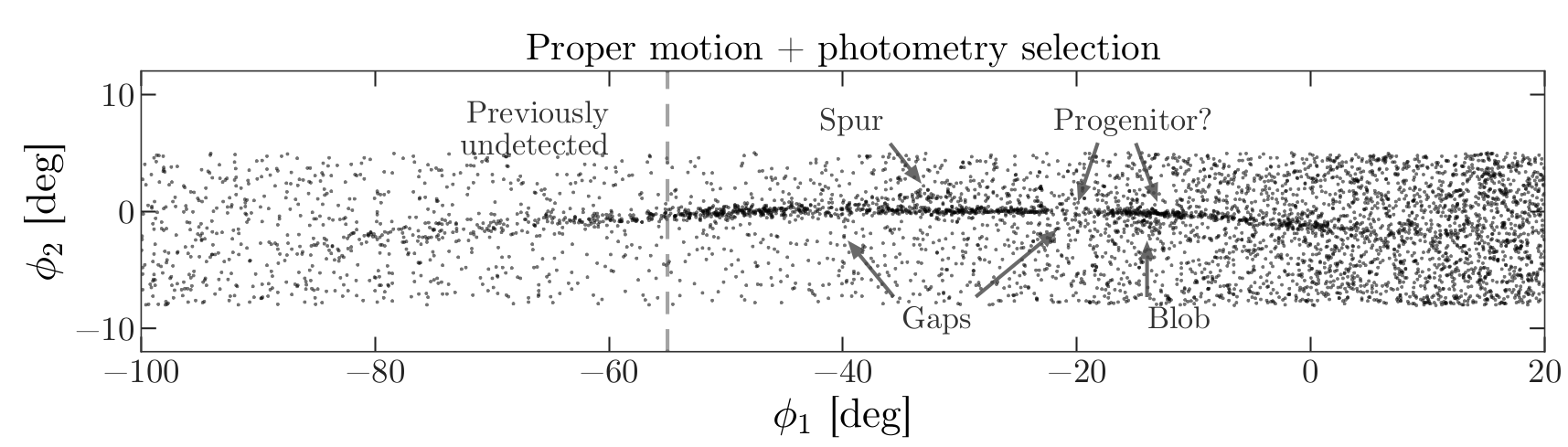

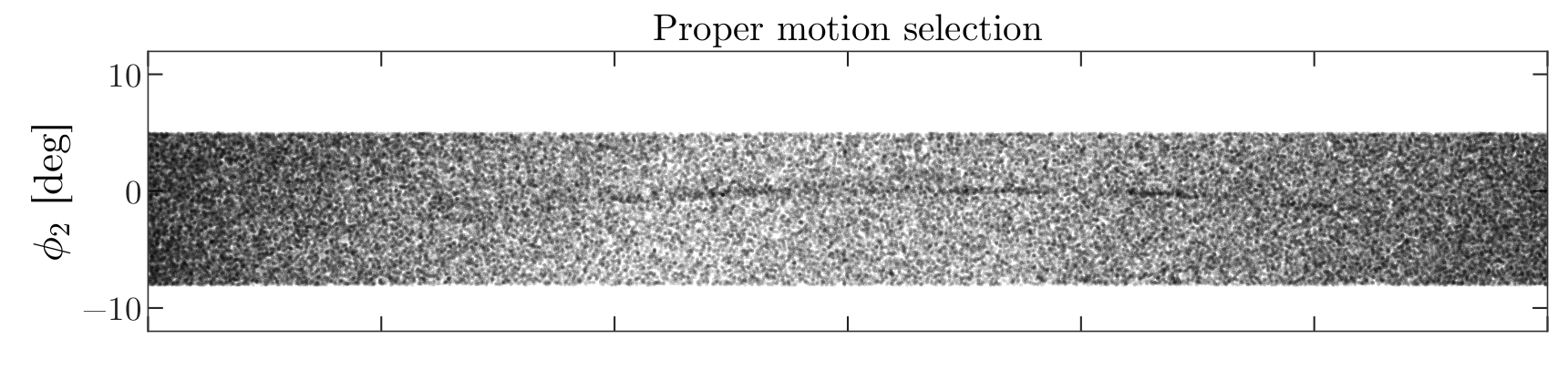

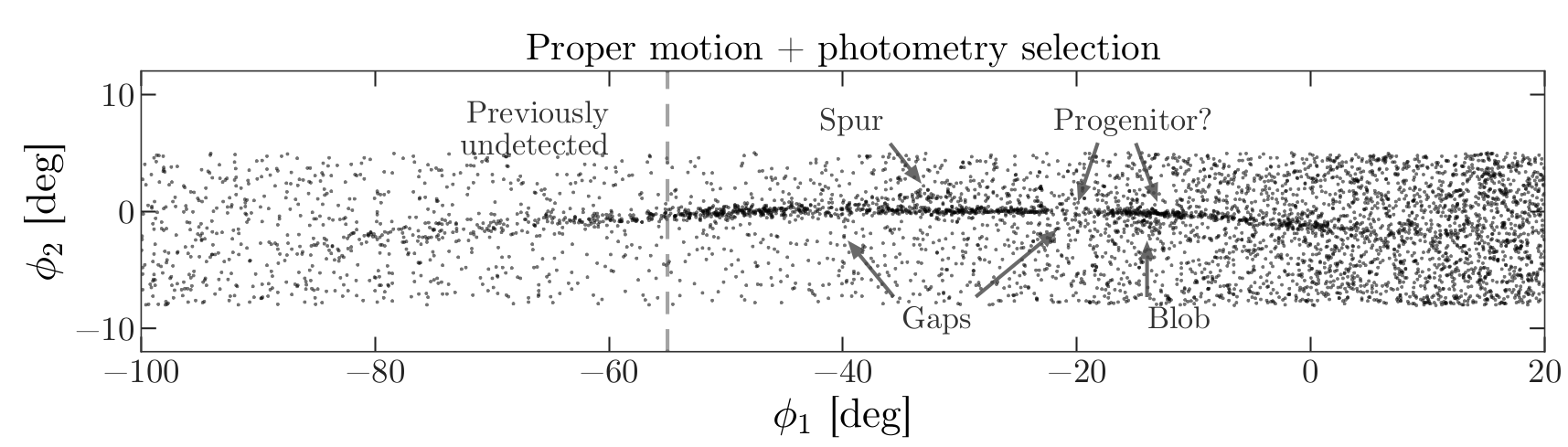

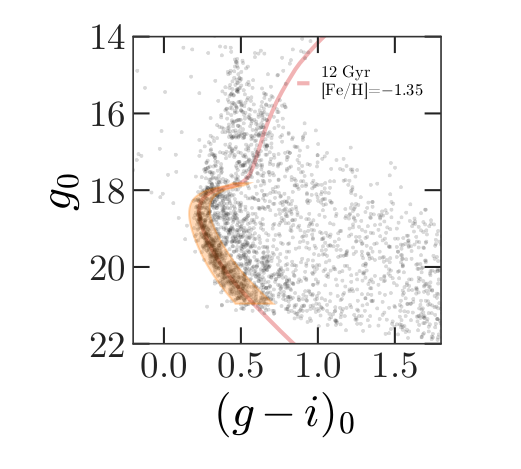

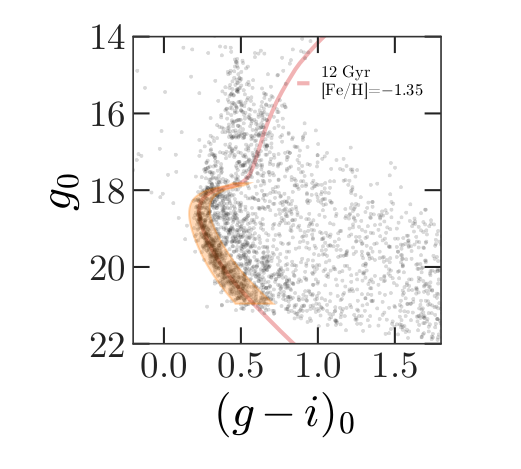

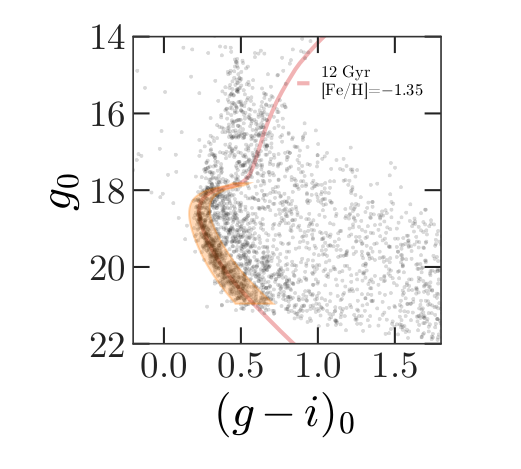

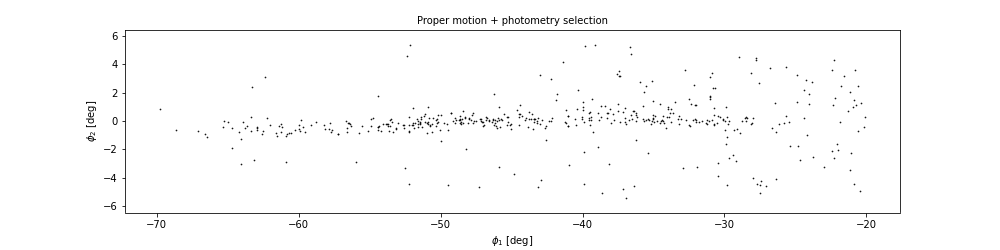

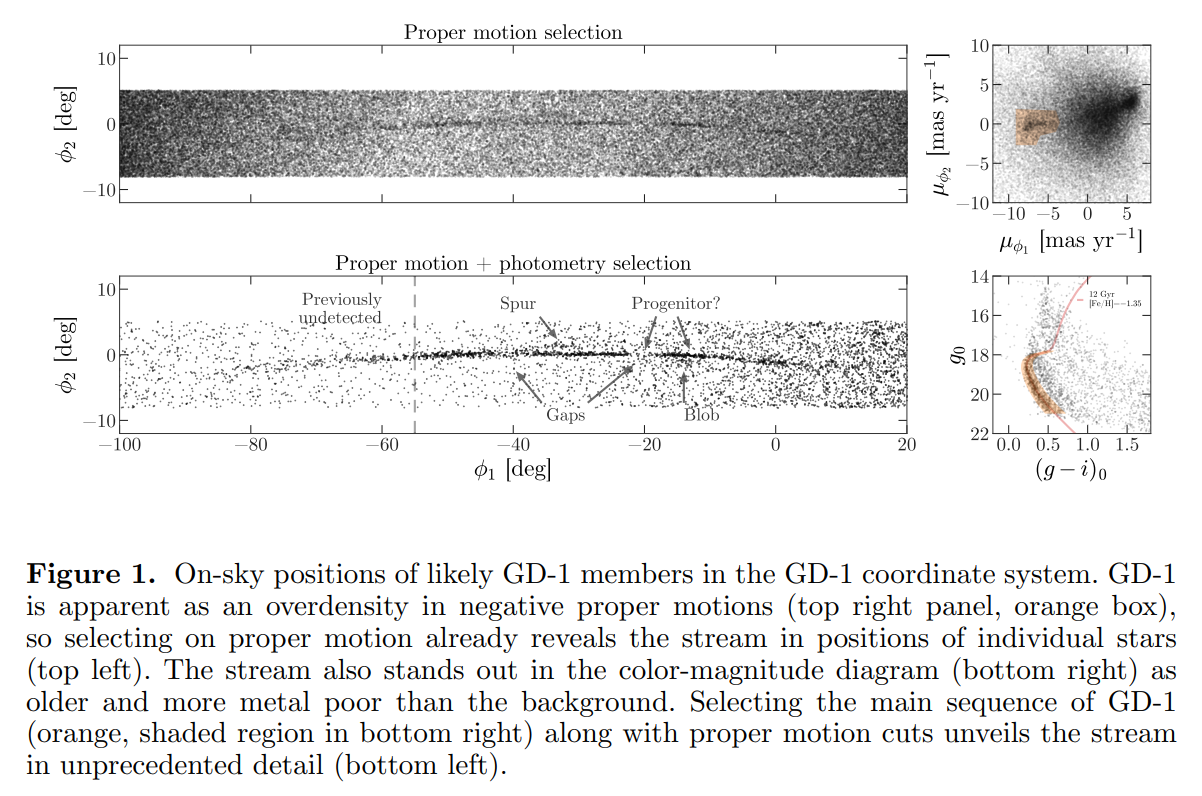

From the Price-Whelan and Bonaca paper, we will try to reproduce Figure 1, which includes this representation of stars likely to belong to GD-1:

The axes of this figure are defined so the x-axis is aligned with the stars in GD-1, and the y-axis is perpendicular.

Along the x-axis (\(\phi_1\)) the figure extends from -100 to 20 degrees.

Along the y-axis (\(\phi_2\)) the figure extends from about -8 to 4 degrees.

Ideally, we would select all stars from this rectangle, but there are more than 10 million of them. This would be difficult to work with, and as anonymous Gaia users, we are limited to 3 million rows in a single query. While we are developing and testing code, it will be faster to work with a smaller dataset.

So we will start by selecting stars in a smaller rectangle near the center of GD-1, from -55 to -45 degrees \(\phi_1\) and -8 to 4 degrees \(\phi_2\). First we will learn how to represent these coordinates with Astropy.

Transforming coordinates

Astronomy makes use of many different coordinate systems. Transforming between coordinate systems is a common task in observational astronomy, and thankfully, Astropy has abstracted the required spherical trigonometry for us. Below we show the steps to go from Equatorial coordinates (sky coordinates) to Galactic coordinates and finally to a reference frame defined to more easily study GD-1.

Astropy provides a SkyCoord object that represents sky

coordinates relative to a specified reference frame.

The following example creates a SkyCoord object that

represents the approximate coordinates of Betelgeuse

(alf Ori) in the ICRS frame.

ICRS is the “International Celestial Reference System”, adopted in 1997 by the International Astronomical Union.

PYTHON

from astropy.coordinates import SkyCoord

ra = 88.8 * u.degree

dec = 7.4 * u.degree

coord_icrs = SkyCoord(ra=ra, dec=dec, frame='icrs')

coord_icrsOUTPUT

<SkyCoord (ICRS): (ra, dec) in deg

(88.8, 7.4)>SkyCoord objects require units in order to understand

the context. There are a number of ways to define SkyCoord

objects, in our example, we explicitly specified the coordinates and

units and provided a reference frame.

SkyCoord provides the transform_to function

to transform from one reference frame to another reference frame. For

example, we can transform coords_icrs to Galactic

coordinates like this:

OUTPUT

<SkyCoord (Galactic): (l, b) in deg

(199.79693102, -8.95591653)>Coordinate Variables

Notice that in the Galactic frame, the coordinates are the variables

we usually use for Galactic longitude and latitude called l

and b, respectively, not ra and

dec. Most reference frames have different ways to specify

coordinates and the SkyCoord object will use these

names.

To transform to and from GD-1 coordinates, we will use a frame defined by Gala, which is an Astropy-affiliated library that provides tools for galactic dynamics.

Gala provides GD1Koposov10,

which is “a Heliocentric spherical coordinate system defined by the

orbit of the GD-1 stream”. In this coordinate system, one axis is

defined along the direction of the stream (the x-axis in Figure 1) and

one axis is defined perpendicular to the direction of the stream (the

y-axis in Figure 1). These are called the \(\phi_1\) and \(\phi_2\) coordinates, respectively.

OUTPUT

<GD1Koposov10 Frame>GD1Koposov10 import

The GD1Koposov10 reference frame is part of the

gala package. For convience we provide the stand alone file

as part of the student_download zip file you downloaded. If

your notebook is not in your student_download directory,

you will need to add the following before the import statement

Where <path to student_download> is the path to

your student_download directory ending with

student_download.

We can use it to find the coordinates of Betelgeuse in the GD-1 frame, like this:

OUTPUT

<SkyCoord (GD1Koposov10): (phi1, phi2) in deg

(-94.97222038, 34.5813813)>Exercise (10 minutes)

Find the location of GD-1 in ICRS coordinates.

Create a

SkyCoordobject at 0°, 0° in the GD-1 frame.Transform it to the ICRS frame.

Hint: Because ICRS is a standard frame, it is built into Astropy. You

can specify it by name, icrs (as we did with

galactic).

Notice that the origin of the GD-1 frame maps to ra=200,

exactly, in ICRS. That is by design.

Selecting a rectangle

Now that we know how to define coordinate transformations, we are going to use them to get a list of stars that are in GD-1. As we mentioned before, this is a lot of stars, so we are going to start by defining a rectangle that encompasses a small part of GD-1. This is easiest to define in GD-1 coordinates.

The following variables define the boundaries of the rectangle in \(\phi_1\) and \(\phi_2\).

PYTHON

phi1_min = -55 * u.degree

phi1_max = -45 * u.degree

phi2_min = -8 * u.degree

phi2_max = 4 * u.degreeThroughout this lesson we are going to be defining a rectangle often. Rather than copy and paste multiple lines of code, we will write a function to build the rectangle for us. By having the code contained in a single location, we can easily fix bugs or update our implementation as needed. By choosing an explicit function name our code is also self documenting, meaning it’s easy for us to understand that we are building a rectangle when we call this function.

To create a rectangle, we will use the following function, which takes the lower and upper bounds as parameters and returns a list of x and y coordinates of the corners of a rectangle starting with the lower left corner and working clockwise.

PYTHON

def make_rectangle(x1, x2, y1, y2):

"""Return the corners of a rectangle."""

xs = [x1, x1, x2, x2, x1]

ys = [y1, y2, y2, y1, y1]

return xs, ysThe return value is a tuple containing a list of coordinates in \(\phi_1\) followed by a list of coordinates in \(\phi_2\).

phi1_rect and phi2_rect contain the

coordinates of the corners of a rectangle in the GD-1 frame.

While it is easier to visualize the regions we want to define in the GD-1 frame, the coordinates in the Gaia catalog are in the ICRS frame. In order to use the rectangle we defined, we need to convert the coordinates from the GD-1 frame to the ICRS frame. We will do this using the SkyCoord object. Fortunately SkyCoord objects can take lists of coordinates, in addition to single values.

OUTPUT

<SkyCoord (GD1Koposov10): (phi1, phi2) in deg

[(-55., -8.), (-55., 4.), (-45., 4.), (-45., -8.), (-55., -8.)]>Now we can use transform_to to convert to ICRS

coordinates.

OUTPUT

<SkyCoord (ICRS): (ra, dec) in deg

[(146.27533314, 19.26190982), (135.42163944, 25.87738723),

(141.60264825, 34.3048303 ), (152.81671045, 27.13611254),

(146.27533314, 19.26190982)]>Notice that a rectangle in one coordinate system is not necessarily a rectangle in another. In this example, the result is a (non-rectangular) polygon. This is why we defined our rectangle in the GD-1 frame.

Defining a polygon

In order to use this polygon as part of an ADQL query, we have to convert it to a string with a comma-separated list of coordinates, as in this example:

SkyCoord provides to_string, which produces

a list of strings.

OUTPUT

['146.275 19.2619',

'135.422 25.8774',

'141.603 34.3048',

'152.817 27.1361',

'146.275 19.2619']We can use the Python string function join to join

corners_list_str into a single string (with spaces between

the pairs):

OUTPUT

'146.275 19.2619 135.422 25.8774 141.603 34.3048 152.817 27.1361 146.275 19.2619'This is almost what we need, but we have to replace the spaces with commas.

OUTPUT

'146.275, 19.2619, 135.422, 25.8774, 141.603, 34.3048, 152.817, 27.1361, 146.275, 19.2619'This is something we will need to do multiple times. We will write a function to do it for us so we don’t have to copy and paste every time. The following function combines these steps.

PYTHON

def skycoord_to_string(skycoord):

"""Convert a one-dimensional list of SkyCoord to string for Gaia's query format."""

corners_list_str = skycoord.to_string()

corners_single_str = ' '.join(corners_list_str)

return corners_single_str.replace(' ', ', ')Here is how we use this function:

OUTPUT

'146.275, 19.2619, 135.422, 25.8774, 141.603, 34.3048, 152.817, 27.1361, 146.275, 19.2619'Assembling the query

Now we are ready to assemble our query to get all of the stars in the Gaia catalog that are in the small rectangle we defined and are likely to be part of GD-1 with the criteria we previously defined.

We need columns again (as we saw in the previous

episode).

And here is the query base we used in the previous lesson:

PYTHON

query3_base = """SELECT

TOP 10

{columns}

FROM gaiadr2.gaia_source

WHERE parallax < 1

AND bp_rp BETWEEN -0.75 AND 2

"""Now we will add a WHERE clause to select stars in the

polygon we defined and start using more descriptive variables for our

queries.

PYTHON

polygon_top10query_base = """SELECT

TOP 10

{columns}

FROM gaiadr2.gaia_source

WHERE parallax < 1

AND bp_rp BETWEEN -0.75 AND 2

AND 1 = CONTAINS(POINT(ra, dec),

POLYGON({sky_point_list}))

"""The query base contains format specifiers for columns

and sky_point_list.

We will use format to fill in these values.

PYTHON

polygon_top10query = polygon_top10query_base.format(columns=columns,

sky_point_list=sky_point_list)

print(polygon_top10query)OUTPUT

SELECT

TOP 10

source_id, ra, dec, pmra, pmdec, parallax

FROM gaiadr2.gaia_source

WHERE parallax < 1

AND bp_rp BETWEEN -0.75 AND 2

AND 1 = CONTAINS(POINT(ra, dec),

POLYGON(146.275, 19.2619, 135.422, 25.8774, 141.603, 34.3048, 152.817, 27.1361, 146.275, 19.2619))

As always, we should take a minute to proof-read the query before we launch it.

PYTHON

polygon_top10query_job = Gaia.launch_job_async(polygon_top10query)

print(polygon_top10query_job)OUTPUT

INFO: Query finished. [astroquery.utils.tap.core]

<Table length=10>

name dtype unit description

--------- ------- -------- ------------------------------------------------------------------

source_id int64 Unique source identifier (unique within a particular Data Release)

ra float64 deg Right ascension

dec float64 deg Declination

pmra float64 mas / yr Proper motion in right ascension direction

pmdec float64 mas / yr Proper motion in declination direction

parallax float64 mas Parallax

Jobid: 1615815873808O

Phase: COMPLETED

[Output truncated]Here are the results.

OUTPUT

<Table length=10>

source_id ra ... parallax

deg ... mas

------------------ ------------------ ... --------------------

637987125186749568 142.48301935991023 ... -0.2573448962333354

638285195917112960 142.25452941346344 ... 0.4227283465319491

638073505568978688 142.64528557468074 ... 0.10363972229362585

638086386175786752 142.57739430926034 ... -0.8573270355079308

638049655615392384 142.58913564478618 ... 0.099624729200593

638267565075964032 141.81762228999614 ... -0.07271215219283075

[Output truncated]Finally, we can remove TOP 10 and run the query

again.

The result is bigger than our previous queries, so it will take a little longer.

PYTHON

polygon_query_base = """SELECT

{columns}

FROM gaiadr2.gaia_source

WHERE parallax < 1

AND bp_rp BETWEEN -0.75 AND 2

AND 1 = CONTAINS(POINT(ra, dec),

POLYGON({sky_point_list}))

"""PYTHON

polygon_query = polygon_query_base.format(columns=columns,

sky_point_list=sky_point_list)

print(polygon_query)OUTPUT

SELECT

source_id, ra, dec, pmra, pmdec, parallax

FROM gaiadr2.gaia_source

WHERE parallax < 1

AND bp_rp BETWEEN -0.75 AND 2

AND 1 = CONTAINS(POINT(ra, dec),

POLYGON(146.275, 19.2619, 135.422, 25.8774, 141.603, 34.3048, 152.817, 27.1361, 146.275, 19.2619))OUTPUT

INFO: Query finished. [astroquery.utils.tap.core]

<Table length=140339>

name dtype unit description

--------- ------- -------- ------------------------------------------------------------------

source_id int64 Unique source identifier (unique within a particular Data Release)

ra float64 deg Right ascension

dec float64 deg Declination

pmra float64 mas / yr Proper motion in right ascension direction

pmdec float64 mas / yr Proper motion in declination direction

parallax float64 mas Parallax

Jobid: 1615815886707O

Phase: COMPLETED

[Output truncated]OUTPUT

140339There are more than 100,000 stars in this polygon, but that’s a manageable size to work with.

Saving results

This is the set of stars we will work with in the next step. Since we have a substantial dataset now, this is a good time to save it.

Storing the data in a file means we can shut down our notebook and pick up where we left off without running the previous query again.

Astropy Table objects provide write, which

writes the table to disk.

Because the filename ends with fits, the table is

written in the FITS

format, which preserves the metadata associated with the table.

If the file already exists, the overwrite argument

causes it to be overwritten.

We can use getsize to confirm that the file exists and

check the size:

OUTPUT

6.4324951171875Summary

In this notebook, we composed more complex queries to select stars within a polygonal region of the sky. Then we downloaded the results and saved them in a FITS file.

In the next notebook, we’ll reload the data from this file and replicate the next step in the analysis, using proper motion to identify stars likely to be in GD-1.

- For measurements with units, use

Quantityobjects that represent units explicitly and check for errors. - Use the

formatfunction to compose queries; it is often faster and less error-prone. - Develop queries incrementally: start with something simple, test it, and add a little bit at a time.

- Once you have a query working, save the data in a local file. If you shut down the notebook and come back to it later, you can reload the file; you don’t have to run the query again.

Content from Plotting and Tabular Data

Last updated on 2025-12-15 | Edit this page

Overview

Questions

- How do we make scatter plots in Matplotlib?

- How do we store data in a Pandas

DataFrame?

Objectives

- Select rows and columns from an Astropy

Table. - Use Matplotlib to make a scatter plot.

- Use Gala to transform coordinates.

- Make a Pandas

DataFrameand use a BooleanSeriesto select rows. - Save a

DataFramein an HDF5 file.

In the previous episode, we wrote a query to select stars from the region of the sky where we expect GD-1 to be, and saved the results in a FITS file.

Now we will read that data back in and implement the next step in the analysis, identifying stars with the proper motion we expect for GD-1.

Outline

We will read back the results from the previous lesson, which we saved in a FITS file.

Then we will transform the coordinates and proper motion data from ICRS back to the coordinate frame of GD-1.

We will put those results into a Pandas

DataFrame.

Starting from this episode

If you are starting a new notebook for this episode, expand this section for information you will need to get started.

In the previous episode, we ran a query on the Gaia server, downloaded data for roughly 140,000 stars, and saved the data in a FITS file. We will use that data for this episode. Whether you are working from a new notebook or coming back from a checkpoint, reloading the data will save you from having to run the query again.

If you are starting this episode here or starting this episode in a

new notebook, you will need to run the following lines of code. The last

two lines of the import statements assume your notebook is being run in

the student_download directory. If you are running it from

a different directory you will need to add the path using

sys.path.append(<path to student download>) where

<path to student_download> is the path to your

student_download directory ending with

student_download.

This imports previously imported functions:

PYTHON

import astropy.units as u

from astropy.coordinates import SkyCoord

from astropy.table import Table

from episode_functions import *

from gd1 import GD1Koposov10The following code loads in the data (instructions for downloading

data can be found in the setup instructions).

You may need to add a the path to the filename variable below

(e.g. filename = 'student_download/backup-data/gd1_results.fits')

Selecting rows and columns

In the previous episode, we selected spatial and proper motion

information from the Gaia catalog for stars around a small part of GD-1.

The output was returned as an Astropy Table. We can use

info to check the contents.

OUTPUT

<Table length=140339>

name dtype unit description

--------- ------- -------- ------------------------------------------------------------------

source_id int64 Unique source identifier (unique within a particular Data Release)

ra float64 deg Right ascension

dec float64 deg Declination

pmra float64 mas / yr Proper motion in right ascension direction

pmdec float64 mas / yr Proper motion in declination direction

parallax float64 mas ParallaxIn this episode, we will see operations for selecting columns and

rows from an Astropy Table. You can find more information

about these operations in the Astropy

documentation.

We can get the names of the columns like this:

OUTPUT

['source_id', 'ra', 'dec', 'pmra', 'pmdec', 'parallax']And select an individual column like this:

OUTPUT

<Column name='ra' dtype='float64' unit='deg' description='Right ascension' length=140339>

142.48301935991023

142.25452941346344

142.64528557468074

142.57739430926034

142.58913564478618

141.81762228999614

143.18339801317677

142.9347319464589

142.26769745823267

142.89551292869012

[Output truncated]The result is a Column object that contains the data,

and also the data type, units, and name of the column.

OUTPUT

astropy.table.column.ColumnThe rows in the Table are numbered from 0 to

n-1, where n is the number of rows. We can

select the first row like this:

OUTPUT

<Row index=0>

source_id ra dec pmra pmdec parallax

deg deg mas / yr mas / yr mas

int64 float64 float64 float64 float64 float64

------------------ ------------------ ----------------- ------------------- ----------------- -------------------

637987125186749568 142.48301935991023 21.75771616932985 -2.5168384683875766 2.941813096629439 -0.2573448962333354The result is a Row object.

OUTPUT

astropy.table.row.RowNotice that the bracket operator can be used to select both columns and rows. You might wonder how it knows which to select. If the expression in brackets is a string, it selects a column; if the expression is an integer, it selects a row.

If you apply the bracket operator twice, you can select a column and then an element from the column.

OUTPUT

np.float64(142.48301935991023)Or you can select a row and then an element from the row.

OUTPUT

np.float64(142.48301935991023)You get the same result either way.

Scatter plot

To see what the results look like, we will use a scatter plot. The library we will use is Matplotlib, which is the most widely-used plotting library for Python. The Matplotlib interface is based on MATLAB (hence the name), so if you know MATLAB, some of it will be familiar.

We will import like this:

Pyplot is part of the Matplotlib library. It is conventional to

import it using the shortened name plt.

Keeping plots in the notebook

In recent versions of Jupyter, plots appear “inline”; that is, they are part of the notebook. In some older versions, plots appear in a new window. If your plots appear in a new window, you might want to run the following Jupyter magic command in a notebook cell:

Pyplot provides two functions that can make scatter plots, plt.scatter and plt.plot.

scatteris more versatile; for example, you can make every point in a scatter plot a different color.plotis more limited, but for simple cases, it can be substantially faster.

Jake Vanderplas explains these differences in The Python Data Science Handbook.

Since we are plotting more than 100,000 points and they are all the

same size and color, we will use plot.

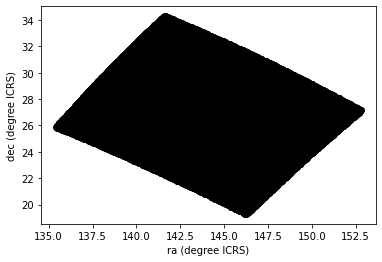

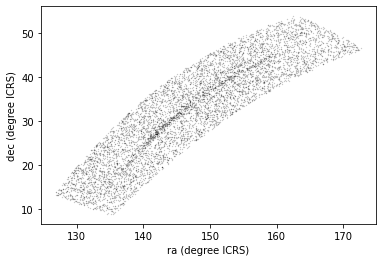

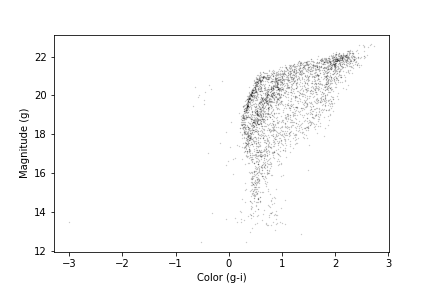

Here is a scatter plot of the stars we selected in the GD-1 region with right ascension on the x-axis and declination on the y-axis, both ICRS coordinates in degrees.

PYTHON

x = polygon_results['ra']

y = polygon_results['dec']

plt.plot(x, y, 'ko')

plt.xlabel('ra (degree ICRS)')

plt.ylabel('dec (degree ICRS)')OUTPUT

<Figure size 432x288 with 1 Axes>

The arguments to plt.plot are x,

y, and a string that specifies the style. In this case, the

letters ko indicate that we want a black, round marker

(k is for black because b is for blue). The

functions xlabel and ylabel put labels on the

axes.

Looking at this plot, we can see that the region we selected, which is a rectangle in GD-1 coordinates, is a non-rectangular region in ICRS coordinates.

However, this scatter plot has a problem. It is “overplotted”, which means that there are so many overlapping points, we cannot distinguish between high and low density areas.

To fix this, we can provide optional arguments to control the size and transparency of the points.

Exercise (5 minutes)

In the call to plt.plot, use the keyword argument

markersize to make the markers smaller.

Then add the keyword argument alpha to make the markers

partly transparent.

Adjust these arguments until you think the figure shows the data most clearly.

Note: Once you have made these changes, you might notice that the figure shows stripes with lower density of stars. These stripes are caused by the way Gaia scans the sky, which you can read about here. The dataset we are using, Gaia Data Release 2, covers 22 months of observations; during this time, some parts of the sky were scanned more than others.

Transform back

Remember that we selected data from a rectangle of coordinates in the GD-1 frame, then transformed them to ICRS when we constructed the query. The coordinates in the query results are in ICRS.

To plot them, we will transform them back to the GD-1 frame; that way, the axes of the figure are aligned with the orbit of GD-1, which is useful for two reasons:

By transforming the coordinates, we can identify stars that are likely to be in GD-1 by selecting stars near the centerline of the stream, where \(\phi_2\) is close to 0.

By transforming the proper motions, we can identify stars with non-zero proper motion along the \(\phi_1\) axis, which are likely to be part of GD-1.

To do the transformation, we will put the results into a

SkyCoord object. In a previous episode, we created a

SkyCoord object like this:

Notice that we did not specify the reference frame. That is because

when using ra and dec in

SkyCoord, the ICRS frame is assumed by

default.

The SkyCoord object can keep track not just of location,

but also proper motions. This means that we can initialize a

SkyCoord object with location and proper motions, then use

all of these quantities together to transform into the GD-1 frame.

Now we are going to do something similar, but now we will take

advantage of the SkyCoord object’s capacity to include and

track space motion information in addition to ra and

dec. We will now also include:

pmraandpmdec, which are proper motion in theICRSframe, anddistanceandradial_velocity, which are important for the reflex correction and will be discussed in that section.

PYTHON

distance = 8 * u.kpc

radial_velocity= 0 * u.km/u.s

skycoord = SkyCoord(ra=polygon_results['ra'],

dec=polygon_results['dec'],

pm_ra_cosdec=polygon_results['pmra'],

pm_dec=polygon_results['pmdec'],

distance=distance,

radial_velocity=radial_velocity)For the first four arguments, we use columns from

polygon_results.

For distance and radial_velocity we use

constants, which we explain in the section on reflex correction.

The result is an Astropy SkyCoord object, which we can

transform to the GD-1 frame.

The result is another SkyCoord object, now in the GD-1

frame.

Reflex Correction

The next step is to correct the proper motion measurements for the effect of the motion of our solar system around the Galactic center.

When we created skycoord, we provided constant values

for distance and radial_velocity rather than

measurements from Gaia.

That might seem like a strange thing to do, but here is the motivation:

Because the stars in GD-1 are so far away, parallaxes measured by Gaia are negligible, making the distance estimates unreliable.

So we replace them with our current best estimate of the mean distance to GD-1, about 8 kpc. See Koposov, Rix, and Hogg, 2010.For the other stars in the table, this distance estimate will be inaccurate, so reflex correction will not be correct. But that should have only a small effect on our ability to identify stars with the proper motion we expect for GD-1.

The measurement of radial velocity has no effect on the correction for proper motion, but we have to provide a value to avoid errors in the reflex correction calculation. So we provide

0as an arbitrary place-keeper.

With this preparation, we can use reflex_correct from

Gala (documentation

here) to correct for the motion of the solar system which we have

included as a stand alone file in the student_download.

The result is a SkyCoord object that contains

phi1andphi2, which represent the transformed coordinates in the GD-1 frame.pm_phi1_cosphi2andpm_phi2, which represent the transformed proper motions that have been corrected for the motion of the solar system around the Galactic center.

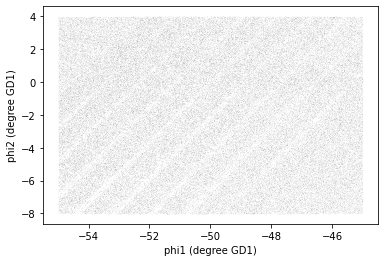

We can select the coordinates and plot them like this:

PYTHON

x = skycoord_gd1.phi1

y = skycoord_gd1.phi2

plt.plot(x, y, 'ko', markersize=0.1, alpha=0.1)

plt.xlabel('phi1 (degree GD1)')

plt.ylabel('phi2 (degree GD1)')OUTPUT

<Figure size 432x288 with 1 Axes>

We started with a rectangle in the GD-1 frame. When transformed to the ICRS frame, it is a non-rectangular region. Now, transformed back to the GD-1 frame, it is a rectangle again.

Pandas DataFrame

At this point we have two objects containing different sets of the

data relating to identifying stars in GD-1. polygon_results

is the Astropy Table we downloaded from Gaia.

OUTPUT

astropy.table.table.TableAnd skycoord_gd1 is a SkyCoord object that

contains the transformed coordinates and proper motions.

OUTPUT

astropy.coordinates.sky_coordinate.SkyCoordOn one hand, this division of labor makes sense because each object provides different capabilities. But working with multiple object types can be awkward. It will be more convenient to choose one object and get all of the data into it.

Now we can extract the columns we want from skycoord_gd1

and add them as columns in the Astropy Table

polygon_results. phi1 and phi2

contain the transformed coordinates.

PYTHON

polygon_results['phi1'] = skycoord_gd1.phi1

polygon_results['phi2'] = skycoord_gd1.phi2

polygon_results.info()OUTPUT

<Table length=140339>

name dtype unit description class

--------- ------- -------- ------------------------------------------------------------------ ------------

source_id int64 Unique source identifier (unique within a particular Data Release) MaskedColumn

ra float64 deg Right ascension MaskedColumn

dec float64 deg Declination MaskedColumn

pmra float64 mas / yr Proper motion in right ascension direction MaskedColumn

pmdec float64 mas / yr Proper motion in declination direction MaskedColumn

parallax float64 mas Parallax MaskedColumn

phi1 float64 deg Column

phi2 float64 deg Columnpm_phi1_cosphi2 and pm_phi2 contain the

components of proper motion in the transformed frame.

PYTHON

polygon_results['pm_phi1'] = skycoord_gd1.pm_phi1_cosphi2

polygon_results['pm_phi2'] = skycoord_gd1.pm_phi2

polygon_results.info()OUTPUT

<Table length=140339>

name dtype unit description class

--------- ------- -------- ------------------------------------------------------------------ ------------

source_id int64 Unique source identifier (unique within a particular Data Release) MaskedColumn

ra float64 deg Right ascension MaskedColumn

dec float64 deg Declination MaskedColumn

pmra float64 mas / yr Proper motion in right ascension direction MaskedColumn

pmdec float64 mas / yr Proper motion in declination direction MaskedColumn

parallax float64 mas Parallax MaskedColumn

phi1 float64 deg Column

phi2 float64 deg Column

pm_phi1 float64 mas / yr Column

pm_phi2 float64 mas / yr ColumnDetail If you notice that SkyCoord has an attribute

called proper_motion, you might wonder why we are not using

it.

We could have: proper_motion contains the same data as

pm_phi1_cosphi2 and pm_phi2, but in a

different format.

Pandas DataFrames versus Astropy

Tables

Two common choices are the Pandas DataFrame and Astropy

Table. Pandas DataFrames and Astropy

Tables share many of the same characteristics and most of

the manipulations that we do can be done with either. As you become more

familiar with each, you will develop a sense of which one you prefer for

different tasks. For instance you may choose to use Astropy

Tables to read in data, especially astronomy specific data

formats, but Pandas DataFrames to inspect the data.

Fortunately, Astropy makes it easy to convert between the two data

types. We will choose to use Pandas DataFrame, for two

reasons:

It provides capabilities that are (almost) a superset of the other data structures, so it is the all-in-one solution.

Pandas is a general-purpose tool that is useful in many domains, especially data science. If you are going to develop expertise in one tool, Pandas is a good choice.

However, compared to an Astropy Table, Pandas has one

big drawback: it does not keep the metadata associated with the table,

including the units for the columns. Nevertheless, we think it’s a

useful data type to be familiar with.

It is straightforward to convert an Astropy Table to a

Pandas DataFrame.

DataFrame provides shape, which shows the

number of rows and columns.

OUTPUT

(140339, 10)It also provides head, which displays the first few

rows. head is useful for spot-checking large results as you

go along.

OUTPUT

source_id ra dec pmra pmdec parallax phi1 phi2 pm_phi1 pm_phi2

0 637987125186749568 142.483019 21.757716 -2.516838 2.941813 -0.257345 -54.975623 -3.659349 6.429945 6.518157

1 638285195917112960 142.254529 22.476168 2.662702 -12.165984 0.422728 -54.498247 -3.081524 -3.168637 -6.206795

2 638073505568978688 142.645286 22.166932 18.306747 -7.950660 0.103640 -54.551634 -3.554229 9.129447 -16.819570

3 638086386175786752 142.577394 22.227920 0.987786 -2.584105 -0.857327 -54.536457 -3.467966 3.837120 0.526461

4 638049655615392384 142.589136 22.110783 0.244439 -4.941079 0.099625 -54.627448 -3.542738 1.466103 -0.185292

Attributes vs functions

shape is an attribute, so we display its value without

calling it as a function.

head is a function, so we need the parentheses.

Before we go any further, we will take all of the steps that we have

done and consolidate them into a single function that we can use to take

the coordinates and proper motion that we get as an Astropy

Table from our Gaia query, add columns representing the

reflex corrected GD-1 coordinates and proper motions, and transform it

into a Pandas DataFrame. This is a general function that we

will use multiple times as we build different queries so we want to

write it once and then call the function rather than having to copy and

paste the code over and over again.

PYTHON

def make_dataframe(table):

"""Transform coordinates from ICRS to GD-1 frame.

table: Astropy Table

returns: Pandas DataFrame

"""

#Create a SkyCoord object with the coordinates and proper motions

# in the input table

skycoord = SkyCoord(

ra=table['ra'],

dec=table['dec'],

pm_ra_cosdec=table['pmra'],

pm_dec=table['pmdec'],

distance=8*u.kpc,

radial_velocity=0*u.km/u.s)

# Define the GD-1 reference frame

gd1_frame = GD1Koposov10()

# Transform input coordinates to the GD-1 reference frame

transformed = skycoord.transform_to(gd1_frame)

# Correct GD-1 coordinates for solar system motion around galactic center

skycoord_gd1 = reflex_correct(transformed)

#Add GD-1 reference frame columns for coordinates and proper motions

table['phi1'] = skycoord_gd1.phi1

table['phi2'] = skycoord_gd1.phi2

table['pm_phi1'] = skycoord_gd1.pm_phi1_cosphi2

table['pm_phi2'] = skycoord_gd1.pm_phi2

# Create DataFrame

df = table.to_pandas()

return dfHere is how we use the function:

Saving the DataFrame

At this point we have run a successful query and combined the results

into a single DataFrame. This is a good time to save the

data.

To save a Pandas DataFrame, one option is to convert it

to an Astropy Table, like this:

PYTHON

from astropy.table import Table

results_table = Table.from_pandas(results_df)

type(results_table)OUTPUT

astropy.table.table.TableThen we could write the Table to a FITS file, as we did

in the previous lesson.

But, like Astropy, Pandas provides functions to write DataFrames in

other formats; to see what they are find

the functions here that begin with to_.

One of the best options is HDF5, which is Version 5 of Hierarchical Data Format.

HDF5 is a binary format, so files are small and fast to read and write (like FITS, but unlike XML).

An HDF5 file is similar to an SQL database in the sense that it can contain more than one table, although in HDF5 vocabulary, a table is called a Dataset. (Multi-extension FITS files can also contain more than one table.)

And HDF5 stores the metadata associated with the table, including column names, row labels, and data types (like FITS).

Finally, HDF5 is a cross-language standard, so if you write an HDF5 file with Pandas, you can read it back with many other software tools (more than FITS).

We can write a Pandas DataFrame to an HDF5 file like

this:

Because an HDF5 file can contain more than one Dataset, we have to provide a name, or “key”, that identifies the Dataset in the file.

We could use any string as the key, but it is generally a good

practice to use a descriptive name (just like your

DataFrame variable name) so we will give the Dataset in the

file the same name (key) as the DataFrame.

By default, writing a DataFrame appends a new dataset to

an existing HDF5 file. We will use the argument mode='w' to

overwrite the file if it already exists rather than append another

dataset to it.

Summary

In this episode, we re-loaded the Gaia data we saved from a previous query.

We transformed the coordinates and proper motion from ICRS to a frame

aligned with the orbit of GD-1, stored the results in a Pandas

DataFrame, and visualized them.

We combined all of these steps into a single function that we can reuse in the future to go straight from the output of a query with object coordinates in the ICRS reference frame directly to a Pandas DataFrame that includes object coordinates in the GD-1 reference frame.

We saved our results to an HDF5 file which we can use to restart the analysis from this stage or verify our results at some future time.

- When you make a scatter plot, adjust the size of the markers and their transparency so the figure is not overplotted; otherwise it can misrepresent the data badly.

- For simple scatter plots in Matplotlib,

plotis faster thanscatter. - An Astropy

Tableand a PandasDataFrameare similar in many ways and they provide many of the same functions. They have pros and cons, but for many projects, either one would be a reasonable choice. - To store data from a Pandas

DataFrame, a good option is an HDF5 file, which can contain multiple Datasets (we’ll dig in more in the Join lesson).

Content from Plotting and Pandas

Last updated on 2025-12-12 | Edit this page

Overview

Questions

- How to efficiently explore our data and identify appropriate filters to produce a clean sample (in this case of GD-1 stars)?

Objectives

- Use a Boolean Pandas

Seriesto select rows in aDataFrame. - Save multiple

DataFrames in an HDF5 file.

In the previous episode, we wrote a query to select stars from the region of the sky where we expect GD-1 to be, and saved the results in a FITS and HDF5 file.

Now we will read that data back in and implement the next step in the analysis, identifying stars with the proper motion we expect for GD-1.

Outline

We will put those results into a Pandas

DataFrame, which we will use to select stars near the centerline of GD-1.Plotting the proper motion of those stars, we will identify a region of proper motion for stars that are likely to be in GD-1.

Finally, we will select and plot the stars whose proper motion is in that region.

Starting from this episode

If you are starting a new notebook for this episode, expand this section for information you will need to get started.

Previously, we ran a query on the Gaia server, downloaded data for

roughly 140,000 stars, transformed the coordinates to the GD-1 reference

frame, and saved the results in an HDF5 file (Dataset name

results_df). We will use that data for this episode.

Whether you are working from a new notebook or coming back from a

checkpoint, reloading the data will save you from having to run the

query again.

If you are starting this episode here or starting this episode in a new notebook, you will need to run the following lines of code.

This imports previously imported functions:

PYTHON

import astropy.units as u

import matplotlib.pyplot as plt

import pandas as pd

from episode_functions import *The following code loads in the data (instructions for downloading

data can be found in the setup instructions).

You may need to add a the path to the filename variable below

(e.g. filename = 'student_download/backup-data/gd1_data.hdf')

Exploring data

One benefit of using Pandas is that it provides functions for

exploring the data and checking for problems. One of the most useful of

these functions is describe, which computes summary

statistics for each column.

OUTPUT

source_id ra dec pmra \

count 1.403390e+05 140339.000000 140339.000000 140339.000000

mean 6.792399e+17 143.823122 26.780285 -2.484404

std 3.792177e+16 3.697850 3.052592 5.913939

min 6.214900e+17 135.425699 19.286617 -106.755260

25% 6.443517e+17 140.967966 24.592490 -5.038789

50% 6.888060e+17 143.734409 26.746261 -1.834943

75% 6.976579e+17 146.607350 28.990500 0.452893

max 7.974418e+17 152.777393 34.285481 104.319923

pmdec parallax phi1 phi2 \

[Output truncated]Exercise (10 minutes)

Review the summary statistics in this table.

Do the values make sense based on what you know about the context?

Do you see any values that seem problematic, or evidence of other data issues?

The most noticeable issue is that some of the parallax values are negative, which seems non-physical.

Negative parallaxes in the Gaia database can arise from a number of causes like source confusion (high negative values) and the parallax zero point with systematic errors (low negative values).

Fortunately, we do not use the parallax measurements in the analysis (one of the reasons we used constant distance for reflex correction).

Plot proper motion

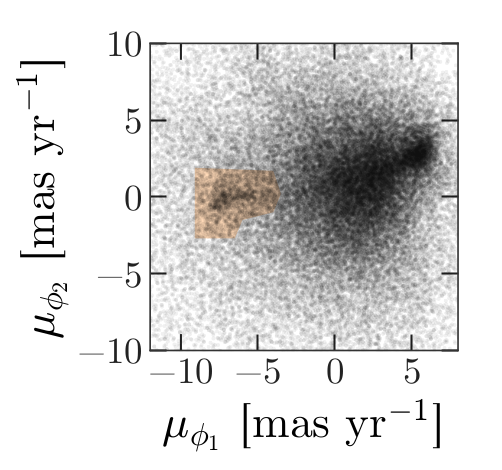

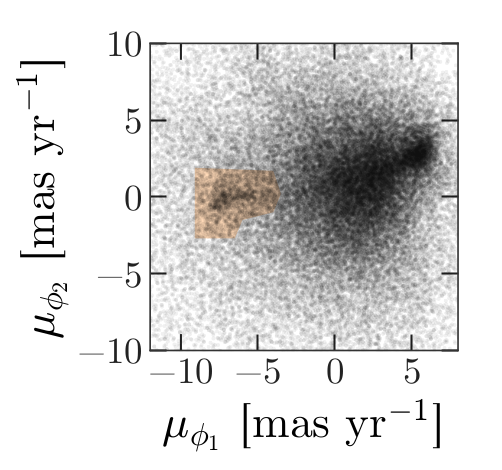

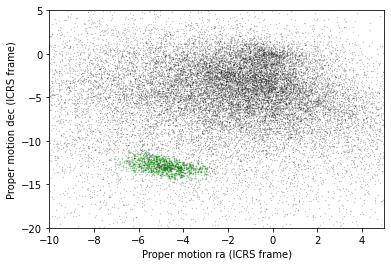

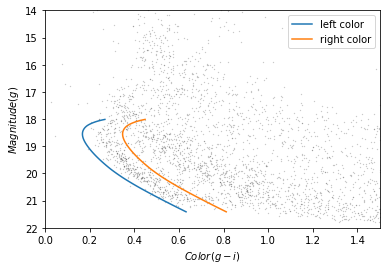

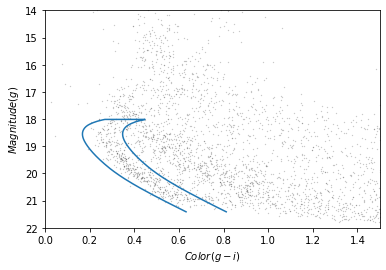

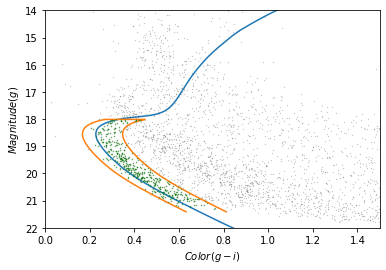

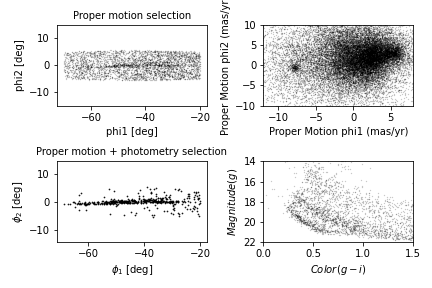

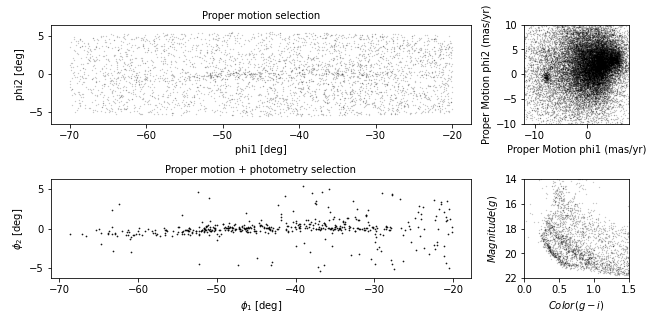

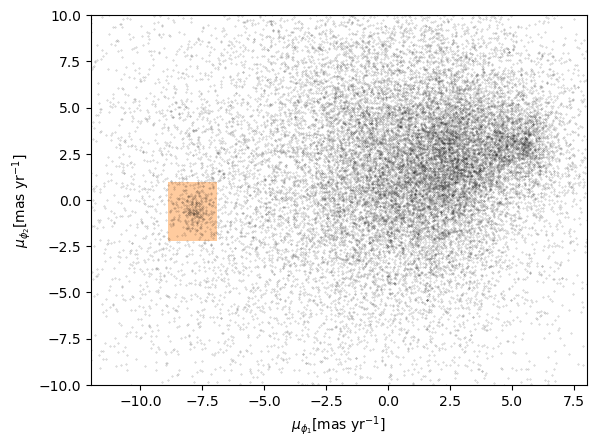

Now we are ready to replicate one of the panels in Figure 1 of the Price-Whelan and Bonaca paper, the one that shows components of proper motion as a scatter plot:

In this figure, the shaded area identifies stars that are likely to be in GD-1 because:

Due to the nature of tidal streams, we expect the proper motion for stars in GD-1 to be along the axis of the stream; that is, we expect motion in the direction of

phi2to be near 0.In the direction of

phi1, we do not have a prior expectation for proper motion, except that it should form a cluster at a non-zero value.

By plotting proper motion in the GD-1 frame, we hope to find this cluster. Then we will use the bounds of the cluster to select stars that are more likely to be in GD-1.

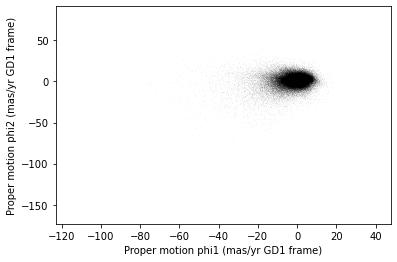

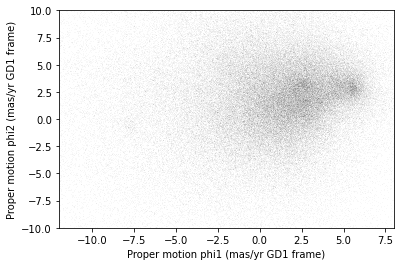

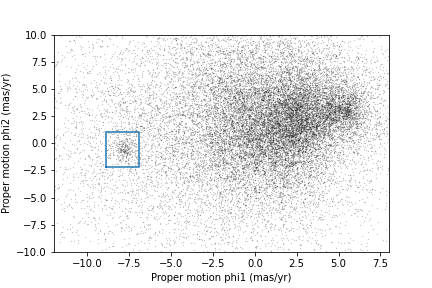

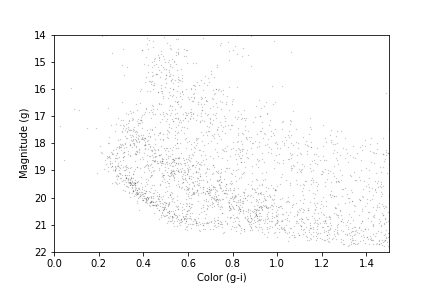

The following figure is a scatter plot of proper motion, in the GD-1

frame, for the stars in results_df.

PYTHON

x = results_df['pm_phi1']

y = results_df['pm_phi2']

plt.plot(x, y, 'ko', markersize=0.1, alpha=0.1)

plt.xlabel('Proper motion phi1 (mas/yr GD1 frame)')

plt.ylabel('Proper motion phi2 (mas/yr GD1 frame)')OUTPUT

<Figure size 432x288 with 1 Axes>

Most of the proper motions are near the origin, but there are a few

extreme values. Following the example in the paper, we will use

xlim and ylim to zoom in on the region near

the origin.

PYTHON

x = results_df['pm_phi1']

y = results_df['pm_phi2']

plt.plot(x, y, 'ko', markersize=0.1, alpha=0.1)

plt.xlabel('Proper motion phi1 (mas/yr GD1 frame)')

plt.ylabel('Proper motion phi2 (mas/yr GD1 frame)')

plt.xlim(-12, 8)

plt.ylim(-10, 10)OUTPUT

<Figure size 432x288 with 1 Axes>

There is a hint of an overdense region near (-7.5, 0), but if you did not know where to look, you would miss it.

To see the cluster more clearly, we need a sample that contains a higher proportion of stars in GD-1. We will do that by selecting stars close to the centerline.

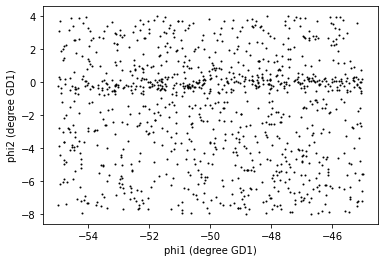

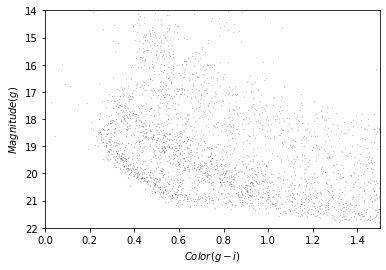

Selecting the centerline

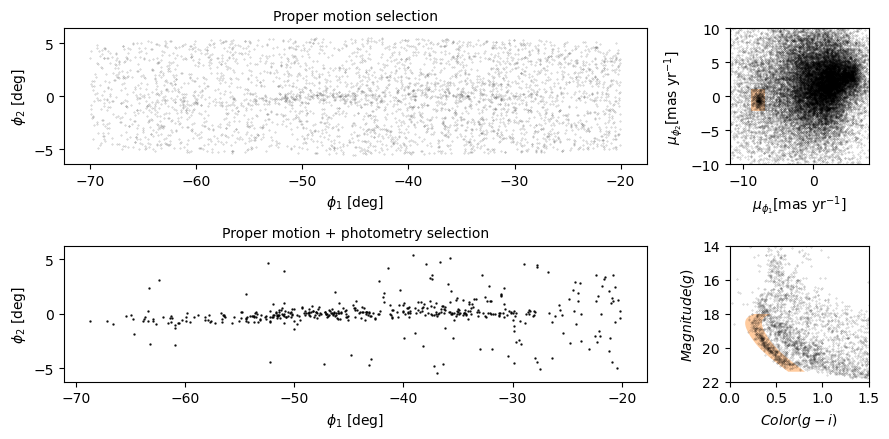

As we can see in the following figure, many stars in GD-1 are less

than 1 degree from the line phi2=0.

Stars near this line have the highest probability of being in GD-1.

To select them, we will use a “Boolean mask”. We will start by

selecting the phi2 column from the

DataFrame:

OUTPUT

pandas.core.series.SeriesThe result is a Series, which is the structure Pandas

uses to represent columns.

We can use a comparison operator, >, to compare the

values in a Series to a constant.

OUTPUT

pandas.core.series.SeriesThe result is a Series of Boolean values, that is,

True and False.

OUTPUT

0 False

1 False

2 False

3 False

4 False

Name: phi2, dtype: boolTo select values that fall between phi2_min and

phi2_max, we will use the & operator,

which computes “logical AND”. The result is true where elements from

both Boolean Series are true.

Logical operators

Python’s logical operators (and, or, and

not) do not work with NumPy or Pandas. Both libraries use

the bitwise operators (&, |, and

~) to do elementwise logical operations (explanation

here).

Also, we need the parentheses around the conditions; otherwise the order of operations is incorrect.

The sum of a Boolean Series is the number of

True values, so we can use sum to see how many

stars are in the selected region.

OUTPUT

np.int64(25084)A Boolean Series is sometimes called a “mask” because we

can use it to mask out some of the rows in a DataFrame and

select the rest, like this:

OUTPUT

pandas.core.frame.DataFramecenterline_df is a DataFrame that contains

only the rows from results_df that correspond to

True values in mask. So it contains the stars

near the centerline of GD-1.

We can use len to see how many rows are in

centerline_df:

OUTPUT

25084And what fraction of the rows we have selected.

OUTPUT

0.1787386257562046There are about 25,000 stars in this region, about 18% of the total.

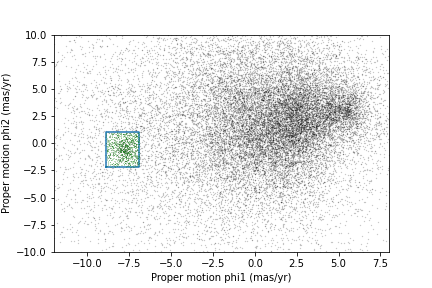

Plotting proper motion

This is the second time we are plotting proper motion, and we can imagine we might do it a few more times. Instead of copying and pasting the previous code, we will write a function that we can reuse on any dataframe.

PYTHON

def plot_proper_motion(df):

"""Plot proper motion.

df: DataFrame with `pm_phi1` and `pm_phi2`

"""

x = df['pm_phi1']

y = df['pm_phi2']

plt.plot(x, y, 'ko', markersize=0.3, alpha=0.3)

plt.xlabel('Proper motion phi1 (mas/yr)')

plt.ylabel('Proper motion phi2 (mas/yr)')

plt.xlim(-12, 8)

plt.ylim(-10, 10)And we can call it like this:

OUTPUT

<Figure size 432x288 with 1 Axes>

Now we can see more clearly that there is a cluster near (-7.5, 0).

You might notice that our figure is less dense than the one in the paper. That is because we started with a set of stars from a relatively small region. The figure in the paper is based on a region about 10 times bigger.

In the next episode we will go back and select stars from a larger region. But first we will use the proper motion data to identify stars likely to be in GD-1.

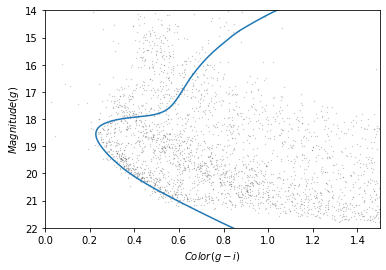

Filtering based on proper motion

The next step is to select stars in the “overdense” region of proper motion, which are candidates to be in GD-1.

In the original paper, Price-Whelan and Bonaca used a polygon to cover this region, as shown in this figure.

We will use a simple rectangle for now, but in a later lesson we will see how to select a polygonal region as well.

Here are bounds on proper motion we chose by eye:

To draw these bounds, we will use the make_rectangle

function we wrote in episode 2 to make two lists containing the

coordinates of the corners of the rectangle.

Here is what the plot looks like with the bounds we chose.

OUTPUT

<Figure size 432x288 with 1 Axes>

Now that we have identified the bounds of the cluster in proper

motion, we will use it to select rows from results_df.

We will use the following function, which uses Pandas operators to

make a mask that selects rows where series falls between

low and high.

PYTHON

def between(series, low, high):

"""Check whether values are between `low` and `high`."""

return (series > low) & (series < high)The following mask selects stars with proper motion in the region we chose.

PYTHON

pm1 = results_df['pm_phi1']

pm2 = results_df['pm_phi2']

pm_mask = (between(pm1, pm1_min, pm1_max) &

between(pm2, pm2_min, pm2_max))Again, the sum of a Boolean series is the number of TRUE

values.

OUTPUT

np.int64(1049)Now we can use this mask to select rows from

results_df.

OUTPUT